diff --git a/.github/workflows/greetings.yml b/.github/workflows/greetings.yml

index 2c5c5c70..860c4a26 100644

--- a/.github/workflows/greetings.yml

+++ b/.github/workflows/greetings.yml

@@ -16,7 +16,7 @@ jobs:

-

-  +

+  To continue with this repo, please visit our [Custom Training Tutorial](https://github.com/ultralytics/yolov3/wiki/Train-Custom-Data) to get started, and see our [Google Colab Notebook](https://github.com/ultralytics/yolov3/blob/master/tutorial.ipynb), [Docker Image](https://hub.docker.com/r/ultralytics/yolov3), and [GCP Quickstart Guide](https://github.com/ultralytics/yolov3/wiki/GCP-Quickstart) for example environments.

@@ -27,4 +27,4 @@ jobs:

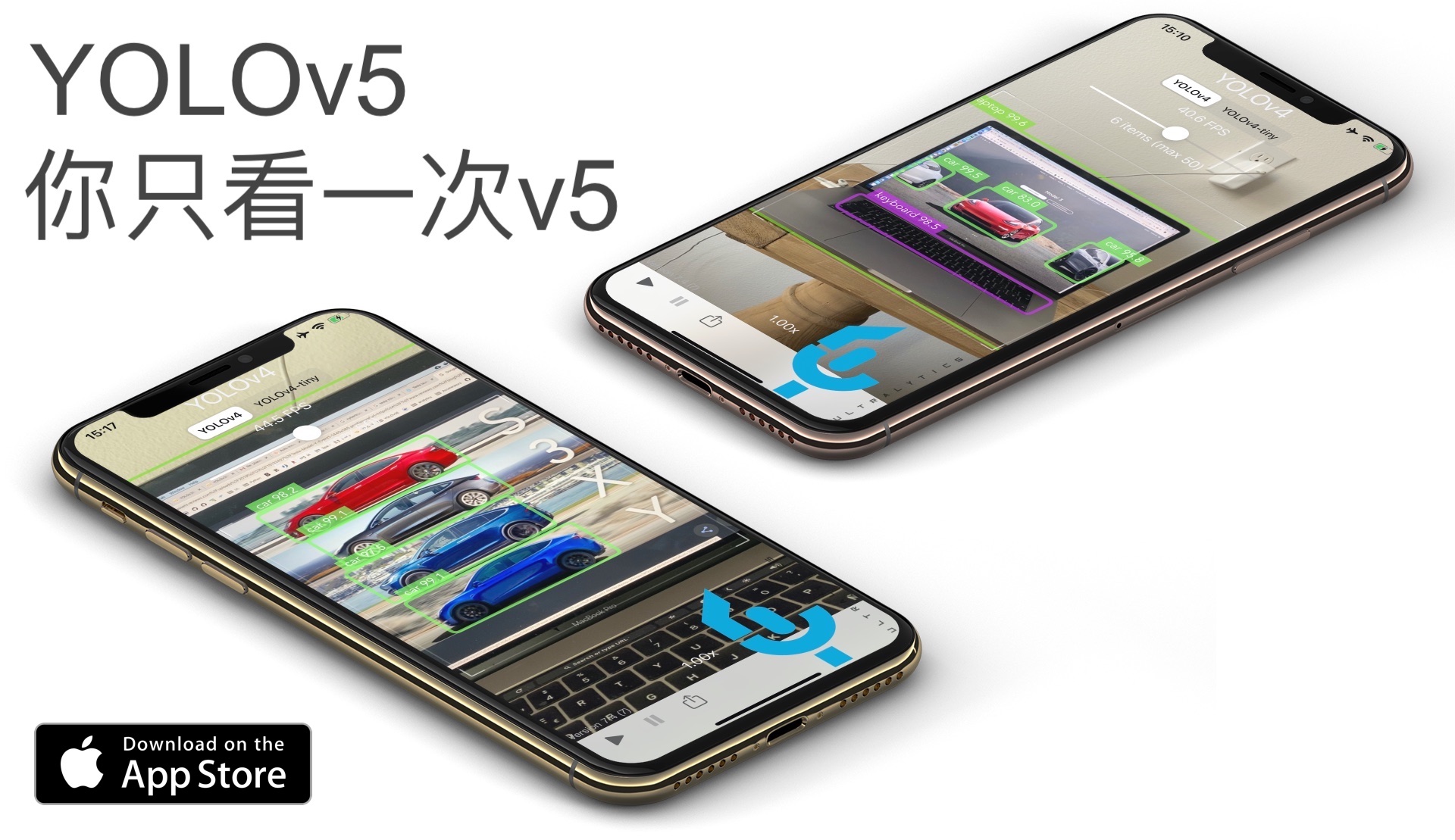

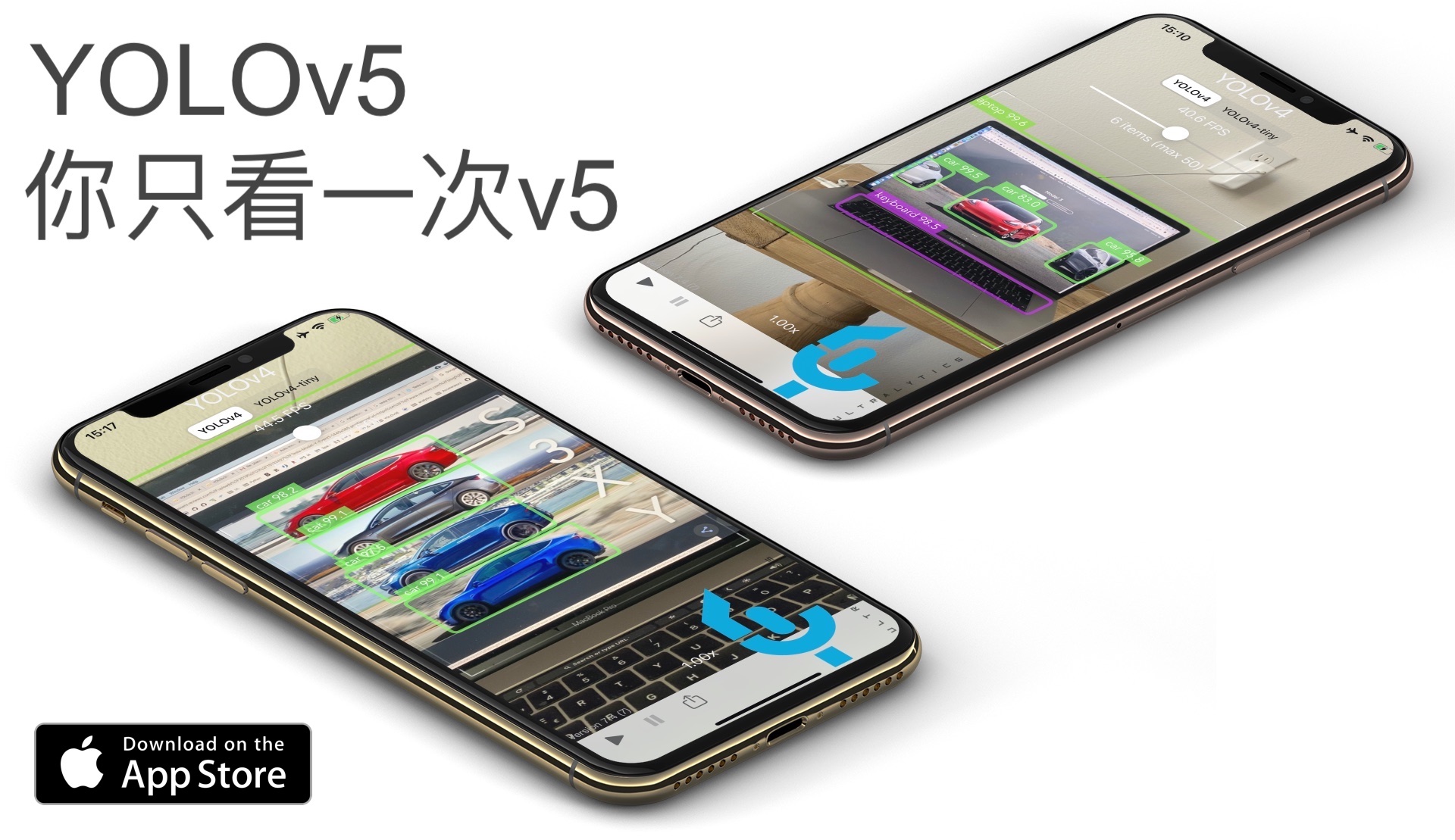

- **Edge AI** integrated into custom iOS and Android apps for realtime **30 FPS video inference.**

- **Custom data training**, hyperparameter evolution, and model exportation to any destination.

- For more information please visit https://www.ultralytics.com.

\ No newline at end of file

+ For more information please visit https://www.ultralytics.com.

diff --git a/models.py b/models.py

index 0323b25e..d5cfc02a 100755

--- a/models.py

+++ b/models.py

@@ -438,7 +438,7 @@ def convert(cfg='cfg/yolov3-spp.cfg', weights='weights/yolov3-spp.weights'):
         target = weights.rsplit('.', 1)[0] + '.pt'
         torch.save(chkpt, target)
-        print("Success: converted '%s' to 's%'" % (weights, target))
+        print("Success: converted '%s' to '%s'" % (weights, target))
     else:
         print('Error: extension not supported.')
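
Review note on the `models.py` change: the `'s%'` typo looks more than cosmetic. As far as I can tell, `%'` is not a valid printf-style conversion in Python, so the old line would raise after the checkpoint had already been written. A minimal sketch, using the default paths from the `convert` signature as sample values (the variable names `broken`, `fixed`, and `broken_ok` are made up for illustration):

```python
# Sample values taken from the defaults in convert(); any two strings would do.
weights, target = "weights/yolov3-spp.weights", "weights/yolov3-spp.pt"

broken = "Success: converted '%s' to 's%'"  # old format string
fixed = "Success: converted '%s' to '%s'"   # format string after this change

try:
    print(broken % (weights, target))
    broken_ok = True
except ValueError as exc:
    # "%'" is parsed as a conversion with an unsupported character
    broken_ok = False
    print("format error:", exc)

message = fixed % (weights, target)
print(message)
```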

diff --git a/requirements.txt b/requirements.txt

index 08c696bb..7b631ebe 100755

--- a/requirements.txt

+++ b/requirements.txt

@@ -3,6 +3,7 @@

numpy == 1.17

opencv-python >= 4.1

torch >= 1.5

+torchvision

matplotlib

pycocotools

tqdm

diff --git a/train.py b/train.py

index ca99daca..705b5198 100644

--- a/train.py

+++ b/train.py

@@ -143,6 +143,15 @@ def train(hyp):
     elif len(weights) > 0:  # darknet format
         # possible weights are '*.weights', 'yolov3-tiny.conv.15', 'darknet53.conv.74' etc.
         load_darknet_weights(model, weights)
+
+    if opt.freeze_layers:
+        output_layer_indices = [idx - 1 for idx, module in enumerate(model.module_list) if isinstance(module, YOLOLayer)]
+        freeze_layer_indices = [x for x in range(len(model.module_list)) if
+                                (x not in output_layer_indices) and
+                                (x - 1 not in output_layer_indices)]
+        for idx in freeze_layer_indices:
+            for parameter in model.module_list[idx].parameters():
+                parameter.requires_grad_(False)
     # Mixed precision training https://github.com/NVIDIA/apex
     if mixed_precision:

@@ -394,6 +403,7 @@ if __name__ == '__main__':
     parser.add_argument('--device', default='', help='device id (i.e. 0 or 0,1 or cpu)')
     parser.add_argument('--adam', action='store_true', help='use adam optimizer')
     parser.add_argument('--single-cls', action='store_true', help='train as single-class dataset')
+    parser.add_argument('--freeze-layers', action='store_true', help='Freeze non-output layers')
     opt = parser.parse_args()
     opt.weights = last if opt.resume else opt.weights
     check_git_status()
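
Review note on `--freeze-layers`: the index selection in the `train.py` hunk appears to leave only each `YOLOLayer` and the module directly before it trainable, and to freeze everything else. Below is a minimal, torch-free sketch of just that selection. `Conv` and the local `YOLOLayer` class are hypothetical stand-ins for the real modules, and `select_freeze_indices` is an illustrative helper name that does not exist in the repo.

```python
class Conv:
    """Hypothetical stand-in for any non-output module."""


class YOLOLayer:
    """Hypothetical stand-in for the detection layer class checked in the diff."""


def select_freeze_indices(module_list):
    # Index of the module directly before each YOLO layer (its output conv).
    output_layer_indices = [idx - 1 for idx, module in enumerate(module_list)
                            if isinstance(module, YOLOLayer)]
    # Freeze everything except those modules and the YOLO layers that follow them.
    return [x for x in range(len(module_list))
            if x not in output_layer_indices and x - 1 not in output_layer_indices]


# Toy layout: YOLO layers at indices 3 and 6, so indices 2, 3, 5, 6 stay trainable.
modules = [Conv(), Conv(), Conv(), YOLOLayer(), Conv(), Conv(), YOLOLayer()]
frozen = select_freeze_indices(modules)
print(frozen)  # [0, 1, 4]
```

In the training script itself, the selected indices are then used to call `requires_grad_(False)` on every parameter of the corresponding modules, as the hunk shows.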
